You don’t need a 6-month project to start capturing value with AI. Most SMEs and scale-ups already have "pockets" of efficiency within their tools and processes, accessible in a few days or weeks… provided you know where to look.

This express AI audit checklist is designed to identify realistic quick wins (automation, assistance, information extraction, light integration) and avoid ideas that look good but aren’t (gimmicky POCs, overly risky topics, unusable data).

What is an AI audit "quick win" (and what it isn’t)

A quick win isn’t necessarily "technically simple." It is primarily a use case that ticks 4 boxes:

Measurable impact (time saved, tickets avoided, accelerated revenue, reduced errors)

Short integration into the existing workflow (CRM, helpdesk, Google Workspace/M365, Slack/Teams, ERP…)

Controlled risk (data, compliance, security, quality)

Rapid deployment (prototype in a few days, pilot in 2 to 4 weeks in many contexts)

Conversely, the following are rarely quick wins:

Replacing a core tool (ERP/CRM) "with an AI"

Automating a process that isn’t standardized

Deploying AI on sensitive data (contracts, health, HR) without a framework or guardrails

| Criterion | Typical quick win | Long / risky bet |
|---|---|---|
| Data | Already available, low sensitivity, fairly clean | Scattered, sensitive, ungoverned |
| Usage | Repetitive and frequent | Rare, highly variable |
| Integration | In existing tools | New product, major change |
| Measurement | Easy KPI to instrument | Diffuse impact, complex attribution |

The express checklist (45 to 90 minutes) to find your quick wins

Objective: come out of this mini-audit with 3 to 7 ranked use cases, each with an ROI hypothesis, a risk level, and a clear next step.

1) Frame the scope in one sentence

Without framing, you collect ideas, not a plan.

Good format: "Reduce time spent on Y (function) by X%, without increasing risk Z, within 30 days."

Realistic examples:

Reduce support response time on simple requests, without exposing personal data

Accelerate sales qualification, without degrading data quality in the CRM

Decrease document processing time (quotes, invoices, orders), with human oversight

2) List 10 "low value" tasks that recur every week

No need to be exhaustive here. You are looking for tasks that combine volume + repetition + friction.

Good signal: "We do it because we have to, not because it creates value."

Frequent examples in SMEs/scale-ups:

Summarizing meetings and producing actionable minutes

Searching for info in internal docs (Notion, Drive, Confluence)

Categorizing, routing, replying to recurring emails

Updating the CRM after calls / meetings

Extracting fields from PDFs (orders, invoices)

3) Map your tools, data, and owners

You want to know where AI can fit in quickly. Take a sheet of paper and note:

"System" tools: CRM, helpdesk, ERP, billing, knowledge base

"Flow" tools: email, internal chat, forms, calendar

Where the data is: Drive, Notion/Confluence, SQL databases, data warehouse

Who owns what: business owner + IT/data owner

Tip: a quick win is often a short integration rather than "yet another AI tool."

4) Identify the data involved and classify the risk

Before even talking about models, ask: what data will pass through the AI?

Simple categories:

Public / non-sensitive: marketing content, public FAQs

Internal non-critical: internal procedures without personal data

Personal data (GDPR): emails, tickets, CRM, HR

Confidential / strategic: pricing, contracts, finances, code

If personal data is involved, check at a minimum:

Legal basis and minimization (GDPR)

Contract/DPA with the provider and retention rules

Logging and access control

For regulatory context, the EU AI Act also formalizes a risk-based approach: this is not just a "tech" topic.
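To make step 4 concrete, here is a minimal Python sketch. It assumes that you tag each data source a use case reads with one of the four categories above. The `DataCategory` enum, the `minimum_checks` helper, and the source names are hypothetical illustrations, not a compliance tool. They only encode the rule stated above: personal data triggers the three minimum checks.

```python
from enum import Enum


class DataCategory(Enum):
    """The four simple categories from step 4 (hypothetical encoding)."""
    PUBLIC = "Public / non-sensitive"          # marketing content, public FAQs
    INTERNAL = "Internal non-critical"         # procedures without personal data
    PERSONAL = "Personal data (GDPR)"          # emails, tickets, CRM, HR
    CONFIDENTIAL = "Confidential / strategic"  # pricing, contracts, finances, code


# Minimum checks listed above whenever personal data passes through the AI.
PERSONAL_DATA_CHECKS = (
    "Legal basis and minimization (GDPR)",
    "Contract/DPA with the provider and retention rules",
    "Logging and access control",
)


def minimum_checks(sources: dict[str, DataCategory]) -> list[str]:
    """Return the checks to run before a pilot; empty if no personal data is involved."""
    touched = set(sources.values())  # every category the use case would send to the AI
    if DataCategory.PERSONAL in touched:
        return list(PERSONAL_DATA_CHECKS)
    return []


# Hypothetical support use case: helpdesk tickets plus a public FAQ.
support_assistant = {
    "helpdesk_tickets": DataCategory.PERSONAL,
    "public_faq": DataCategory.PUBLIC,
}
for check in minimum_checks(support_assistant):
    print("-", check)
```

The sketch deliberately encodes only the personal-data rule stated in this step. Confidential data (pricing, contracts, code) would need its own rules, which are not specified here.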

5) Write an ROI hypothesis (even a rough one)

The ROI of a quick win must be demonstrable. Run a simple calculation:

Minutes saved per occurrence

Occurrences per week

Loaded cost (or capacity freed)

Quality impact (errors avoided, reduced delay)

Simple example: 8 minutes saved per ticket on 150 tickets/month = 1,200 minutes, i.e., 20 hours/month. Then apply a loaded cost to those hours and compare the result with the effort required.
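Here is the same arithmetic as a small Python sketch. The loaded hourly cost, build cost, and monthly run cost in the example are hypothetical figures chosen only to show the calculation. The payback formula (one-off cost divided by net monthly gain) is one simple way to compare the gain with the effort, not the only one.

```python
def monthly_hours_saved(minutes_per_occurrence: float, occurrences_per_month: float) -> float:
    """Convert time saved per occurrence into hours saved per month."""
    return minutes_per_occurrence * occurrences_per_month / 60


def payback_months(hours_saved: float, loaded_hourly_cost: float,
                   build_cost: float, monthly_run_cost: float) -> float:
    """Months until the net monthly gain covers the one-off build cost."""
    net_monthly_gain = hours_saved * loaded_hourly_cost - monthly_run_cost
    if net_monthly_gain <= 0:
        return float("inf")  # running costs eat the gain: it never pays back
    return build_cost / net_monthly_gain


# The example above: 8 minutes saved per ticket on 150 tickets per month.
hours = monthly_hours_saved(8, 150)
print(hours)  # 20.0

# Hypothetical costs (same currency throughout): 50/hour loaded cost,
# 3,000 to build the pilot, 200/month to run it.
print(payback_months(hours, 50, 3000, 200))  # 3.75
```

If you count occurrences per week (as in the list above), convert them to a monthly figure before calling `monthly_hours_saved`.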

To go further on measurement, you can rely on a dedicated KPI approach (Impulse Lab has a complete guide on the subject: AI KPIs: measuring the impact on your company).

6) Prioritize with an "Impact / Effort / Risk" scorecard

Don’t debate for 2 weeks. Score quickly, then test.

| Dimension | Question | Score 1 | Score 3 | Score 5 |
|---|---|---|---|---|
| Impact | Is the gain visible on an operational KPI? | Vague | Measurable | Critical |
| Frequency | How many times per week? | Rare | Regular | Daily |
| Effort | Integration and process changes | Heavy | Medium | Light |
| Data | Accessible and usable? | No | Partial | Yes |
| Risk | GDPR/security/business error | High | Medium | Low |

Then choose 2 use cases:

1 "very safe" (low effort, low risk)

1 "more ambitious" (higher impact, reasonable effort)
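Here is a minimal Python sketch of this scorecard. It assumes each dimension is scored 1, 3, or 5 as in the table, with 5 always at the favorable end (light effort, low risk). It also assumes the total is an unweighted sum. The selection rules in `pick_two` are one possible reading of "very safe" and "more ambitious." The use-case names and scores are hypothetical.

```python
from dataclasses import dataclass

ALLOWED_SCORES = {1, 3, 5}
DIMENSIONS = ("impact", "frequency", "effort", "data", "risk")


@dataclass
class UseCase:
    name: str
    impact: int     # 1 vague, 3 measurable, 5 critical
    frequency: int  # 1 rare, 3 regular, 5 daily
    effort: int     # 1 heavy, 3 medium, 5 light (higher = easier)
    data: int       # 1 no, 3 partial, 5 yes
    risk: int       # 1 high, 3 medium, 5 low (higher = safer)

    def __post_init__(self) -> None:
        for dim in DIMENSIONS:
            if getattr(self, dim) not in ALLOWED_SCORES:
                raise ValueError(f"{self.name}: {dim} must be 1, 3 or 5")

    @property
    def total(self) -> int:
        """Unweighted sum of the five scores (assumption: equal weights)."""
        return sum(getattr(self, dim) for dim in DIMENSIONS)


def pick_two(cases: list[UseCase]) -> tuple[UseCase, UseCase]:
    """Return one 'very safe' and one 'more ambitious' use case."""
    # Very safe: best combined effort + risk score, ties broken by total.
    safe = max(cases, key=lambda c: (c.effort + c.risk, c.total))
    # More ambitious: highest impact among the rest with at least medium effort.
    candidates = [c for c in cases if c is not safe and c.effort >= 3]
    if not candidates:
        raise ValueError("no second use case with reasonable effort")
    ambitious = max(candidates, key=lambda c: (c.impact, c.total))
    return safe, ambitious


cases = [
    UseCase("Meeting minutes", impact=3, frequency=5, effort=5, data=5, risk=5),
    UseCase("PDF order extraction", impact=5, frequency=5, effort=3, data=3, risk=3),
    UseCase("CRM update after calls", impact=3, frequency=3, effort=3, data=3, risk=3),
    UseCase("HR screening assistant", impact=5, frequency=3, effort=1, data=1, risk=1),
]
safe, ambitious = pick_two(cases)
print(safe.name, "|", ambitious.name)  # Meeting minutes | PDF order extraction
```

The HR case drops out because of its heavy effort and high risk. That matches the "long / risky bet" profile described earlier.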

7) Define the expected "next proof"

A quick win advances through proofs, not opinions.

For each selected use case, note:

A pilot scope (team, volume, duration)

A main KPI (north star)

2 guardrails (quality, compliance, cost)

A clear stop condition (if it doesn’t work)
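These four items can be written down as a small, checkable object. Below is a minimal Python sketch. It assumes the main KPI is a number where lower is better (for example, minutes per ticket) and each guardrail is an upper limit. The class name, thresholds, and measured values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PilotPlan:
    scope: str              # team, volume, duration
    kpi_name: str           # the single main KPI (north star); lower is better here
    kpi_baseline: float     # value measured before the pilot
    min_gain_pct: float     # stop condition: below this improvement, stop
    guardrails: dict[str, float] = field(default_factory=dict)  # name -> upper limit

    def kpi_gain_pct(self, kpi_value: float) -> float:
        """Improvement over the baseline, in percent (positive = better)."""
        return (self.kpi_baseline - kpi_value) / self.kpi_baseline * 100

    def verdict(self, kpi_value: float, measured: dict[str, float]) -> str:
        """Apply the guardrails first, then the stop condition on the KPI."""
        for name, limit in self.guardrails.items():
            # A guardrail that was not measured counts as breached.
            if measured.get(name, float("inf")) > limit:
                return f"stop: {name} guardrail breached"
        if self.kpi_gain_pct(kpi_value) < self.min_gain_pct:
            return f"stop: {self.kpi_name} gain below {self.min_gain_pct}%"
        return "continue"


plan = PilotPlan(
    scope="Support team A, simple tickets only, 2 weeks",
    kpi_name="minutes per ticket",
    kpi_baseline=12.0,
    min_gain_pct=20.0,
    guardrails={"error_rate": 0.05, "monthly_cost": 500.0},  # quality + cost
)
print(plan.verdict(9.0, {"error_rate": 0.03, "monthly_cost": 320.0}))  # continue
```

Checking guardrails before the KPI means a pilot that hits its target while breaking a quality or cost limit is still stopped.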

For a structured testing approach, you can also draw inspiration from a rapid validation protocol (see: Enterprise AI Test: simple protocol to validate your ideas).

The most frequent quick wins (and what to check beforehand)

The idea isn’t to copy a "trendy" use case, but to spot those that fit into your operational reality.

| Function | Frequent AI quick win | Data needed | Watch-out points |
|---|---|---|---|
| Support | Assisted responses + ticket routing | Ticket history, knowledge base | Confidentiality, hallucinations, tone |
| Sales | Call minutes + CRM update | Recordings/calls, CRM fields | Consent, summary quality |
| Ops | Field extraction from PDF/email | Recurring docs, stable formats | Error rate, human oversight |
| Finance | Pre-categorization and document checking | Invoices, accounting rules | Sensitive data, traceability |
| HR | Internal FAQ + drafting assistance | Internal policies, docs | Personal data, access |
| Marketing | Content reuse + briefs | Existing content, ICP | Brand consistency, validation |

For support use cases, Impulse Lab has already detailed the impacts and deployment patterns (if this is your priority: Chatbot for SMEs: use cases that pay off).

"Ready-to-print" mini-checklist (to tick off during the audit)

| To check | Simple question | If "no" |
|---|---|---|
| Clear problem | Can the task be described in 1 sentence? | Reformulate, otherwise stop |
| Baseline | Do we currently measure time/cost/quality? | Measure for 1 week |
| Data | Do we know where the data is and who owns it? | Appoint an owner |
| Integration | Can it be inserted into the current tool? | Review the approach |
| Validation | Is someone on the business side assigned to validate quality? | Appoint a reviewer |
| Compliance | Is any sensitive data identified and covered by guardrails? | Define guardrails |
| Adoption | Do we know who will use it, when, and why? | Add a change management step |
| Cost | Do we have a monthly cost ceiling? | Set a budget guardrail |
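If you run the audit in a shared notebook rather than on paper, the table translates into a simple lookup. Below is a minimal Python sketch. It assumes each answer is recorded as True ("yes," the point is settled) or False, and it treats unanswered items as "no." The `follow_ups` helper is hypothetical.

```python
# Item -> action to take if the answer is "no" (from the table above).
IF_NO_ACTIONS = {
    "Clear problem": "Reformulate, otherwise stop",
    "Baseline": "Measure for 1 week",
    "Data": "Appoint an owner",
    "Integration": "Review the approach",
    "Validation": "Appoint a reviewer",
    "Compliance": "Define guardrails",
    "Adoption": "Add a change management step",
    "Cost": "Set a budget guardrail",
}


def follow_ups(answers: dict[str, bool]) -> list[str]:
    """List the 'if no' action for every item not answered 'yes', in table order."""
    return [
        f"{item}: {action}"
        for item, action in IF_NO_ACTIONS.items()
        if not answers.get(item, False)  # a missing answer counts as "no"
    ]


answers = {item: True for item in IF_NO_ACTIONS}
answers["Baseline"] = False
answers["Cost"] = False
for line in follow_ups(answers):
    print(line)
# Baseline: Measure for 1 week
# Cost: Set a budget guardrail
```

Treating missing answers as "no" keeps an unfinished checklist from looking complete.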

The 5 signals indicating it’s not a quick win

When these signals appear, you aren’t "blocked"; you are simply on a topic that deserves a more complete audit.

Hard-to-find or inconsistent data

If you spend more time looking for data than testing the use case, the order of priorities is clear: governance, access, quality.

Non-standardized process

AI amplifies a process; it doesn’t replace it. If everyone does it "their own way," start by standardizing the minimum.

High legal or reputational risk

As soon as you touch sensitive decisions (HR, credit, compliance, health), you need a more robust framework, not a quick fix.

No integration, only a demo

An AI tool used "on the side" creates friction. Without integration, adoption drops, and so does ROI.

No owner

A quick win without an owner becomes a ghost POC.

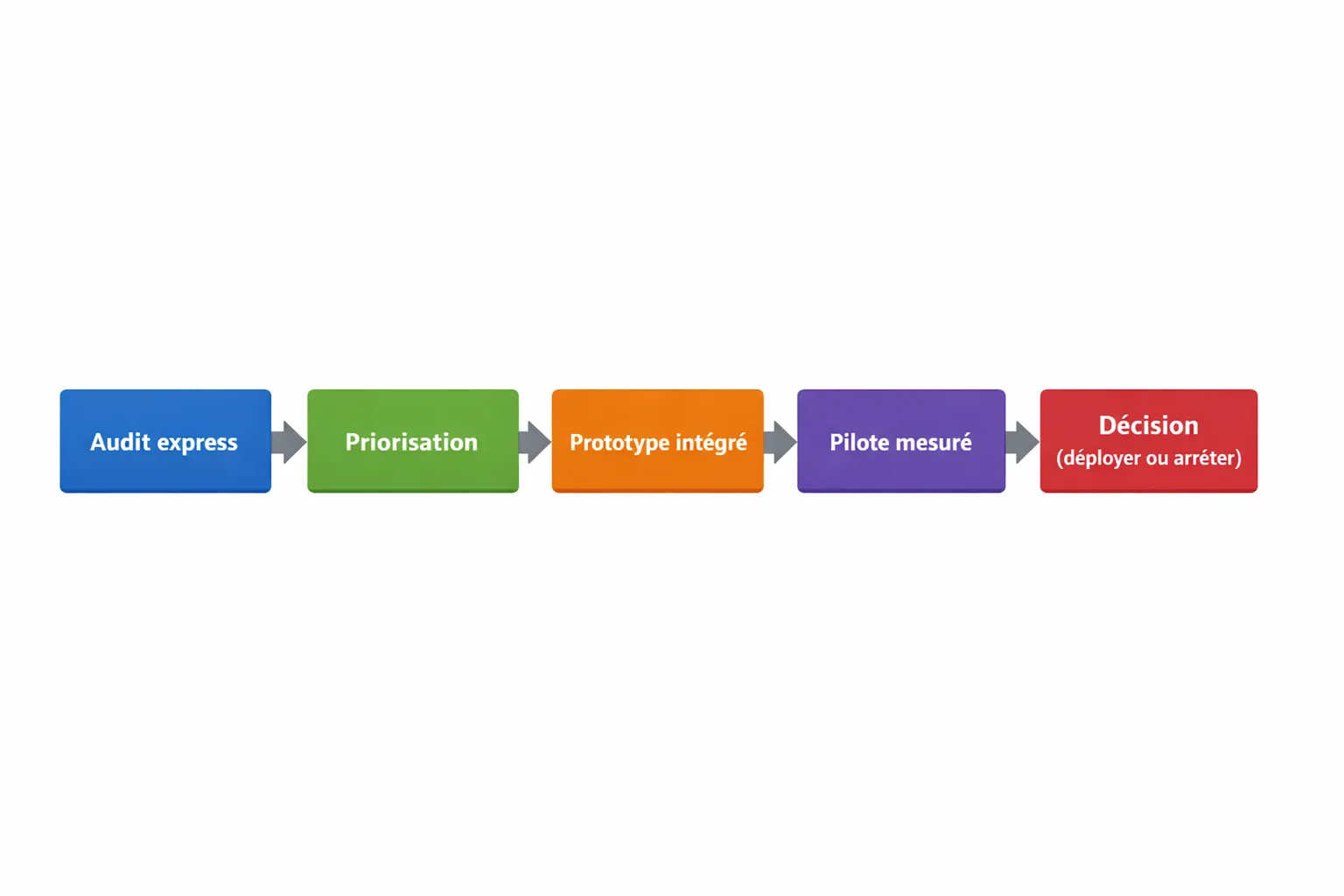

After the checklist: a simple 10-day plan to take action

D1 to D2: choose 2 use cases and freeze KPIs

Even if your KPIs are imperfect at the start, freezing them keeps you from telling yourself stories.

D3 to D6: integrated prototype (not a mockup)

Priorities: authentication, access rights, logs, controlled cost, and integration into the tool people use every day.

D7 to D10: short pilot, measurement, decision

Decide based on a scorecard:

Operational gain

Quality (error rate, user feedback)

Risk (incidents, data)

Cost (predictable, capped)

Then either industrialize it or cut it cleanly.

When to switch from an express checklist to a full AI audit

If you have identified quick wins but also see cross-functional topics (data, security, stack, governance), a checklist is no longer enough.

In that case, a more structured AI audit maps opportunities and risks and produces a roadmap. To understand this format, you can read: Strategic AI Audit: mapping risks and opportunities.

Need an outside perspective to secure your quick wins (without slowing down delivery)?

If you want to:

Identify 5 to 10 truly profitable AI opportunities quickly

Prioritize with ROI and risk logic (GDPR, security, AI Act)

Build a pilot integrated into your tools, delivered in short iterations

Impulse Lab supports SMEs and scale-ups through AI audits, custom development, automation, integration, and adoption training.

You can start with a scoping discussion via the site: impulselab.ai.